Throttle bandwidth between the Broker and a remote Agent

Overview

To keep a network from becoming saturated, you can limit the data throughput between the Broker and an Agent to a desired speed by configuring the number of network connections and the amount of data in flight.

Limiting the data throughput is effective in preventing over-saturation only when there is latency between Broker and Agent.

To control or throttle the data throughput, you need to modify the following Agent configuration settings:

- Number of blob threads

- Amount of data sent in each network payload

- Outstanding (prefetch) count

To do this, the following information is required:

- The Round Trip Time (RTT)

- Desired data throughput (bytes per second)

See the Calculating RTT and Desired Data Throughput section for background on the required information. See the Step-by-step guide section for instructions on modifying Agent configuration settings.

Calculating RTT and Desired Data Throughput

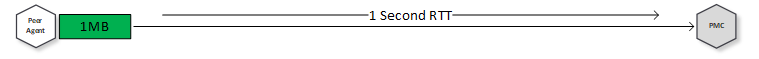

The diagram above illustrates how data throughput can be controlled when there is latency between the Broker and Agent.

From this diagram, we can see that there is an RTT of 1 second. This means that it takes one second to send any payload over the link and receive an acknowledgement. So, if a single connection is used, the throughput in bytes per second equals the payload size. In this example, the payload is 1 megabyte, so the throughput is 1 MB/s.

To achieve more than this, we can add more connections or increase the payload size; to reduce this, we can reduce the payload size.

Using these simple numbers, we can make the following calculation:

Perf = Payload x Connections / RTT

1 MB/s = 1 MB x 1 / 1

Now if we apply the same logic to a connection with 100 ms of latency, four connections, and 256 KB payloads:

Perf = 256 KB x 4 / 0.1 = 10 MB/s

When the desired throughput is known, we can rearrange the above formula to give us:

Connections = Perf x RTT / Payload

Or:

Payload = Perf x RTT / Connections

Step-by-step guide
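As a reference for the steps that follow, the throughput formula and its two rearrangements can be sketched in Python. This is only an illustration and not part of any Peer Software tool: the function names are hypothetical, sizes are in bytes, and RTT is in seconds.

```python
KB = 1024
MB = 1024 * KB


def throughput(payload_bytes, connections, rtt_s):
    """Perf = Payload x Connections / RTT, in bytes per second."""
    return payload_bytes * connections / rtt_s


def connections_needed(perf, rtt_s, payload_bytes):
    """Connections = Perf x RTT / Payload (may be fractional)."""
    return perf * rtt_s / payload_bytes


def payload_needed(perf, rtt_s, connections):
    """Payload = Perf x RTT / Connections, in bytes."""
    return perf * rtt_s / connections


# One 1 MB payload per connection over a 1 s RTT, in MB/s.
print(throughput(1 * MB, 1, 1.0) / MB)

# 256 KB payloads on four connections over a 100 ms RTT, in MB/s.
print(throughput(256 * KB, 4, 0.1) / MB)
```

Both calls reproduce the worked examples from the previous section (about 1 MB/s and 10 MB/s).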

There are two methods for limiting an Agent's data throughput: using the Getperf tool provided by Peer Software, or modifying the Agent configuration manually. The Getperf tool is the preferred method.

Method 1: Use the Getperf tool

The Getperf tool is available in the tools subfolder of the Peer Management Center installation directory and the tools subfolder of the Peer Agent installation directory.

Step 1. Run the following command on the PMC/Broker server within a command prompt or a PowerShell console:

getperf.exe -H -p <port> -u

- <port> is a TCP port that is reachable between the Agent and Broker, is not a privileged port, and is not already in use. If Peer services are running, avoid using ports 61616 or 61617.

- The option -u will update the Broker configuration for best performance and can be omitted if this command has already been run before.

Step 2. Run the following command on the Agent server within a command prompt or PowerShell console:

getperf.exe -a <broker IP> -p <port> -b <bandwidth> -l -x -S -u

- The port must match on both ends.

- Bandwidth can have K, M, G, B or b multipliers (Kilo, Mega, Giga, Bytes, bits).

- The option -x will run an express analysis.

- The option -l means that a limit or throttle is the objective.

- The option -S will perform the analysis without streaming data.

Output:

Getting network bandwidth.|Update complete

Required Job transfer size:1024KB

Step 3. Restart the Agent service. If this is the first time that getperf has been run on the PMC/Broker server, then restart the Broker service too.

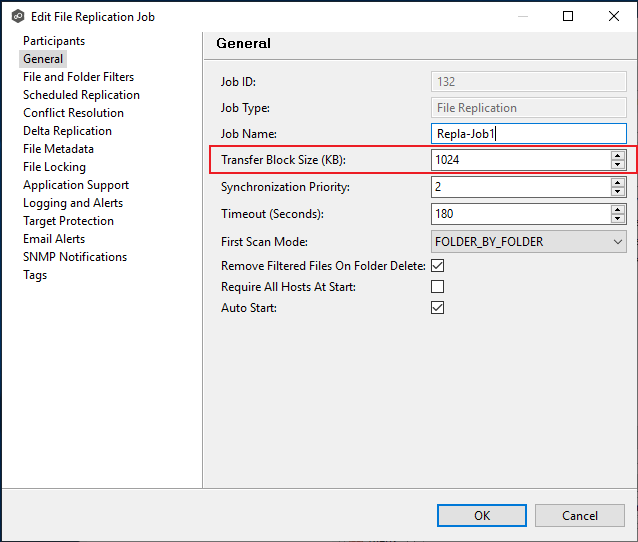

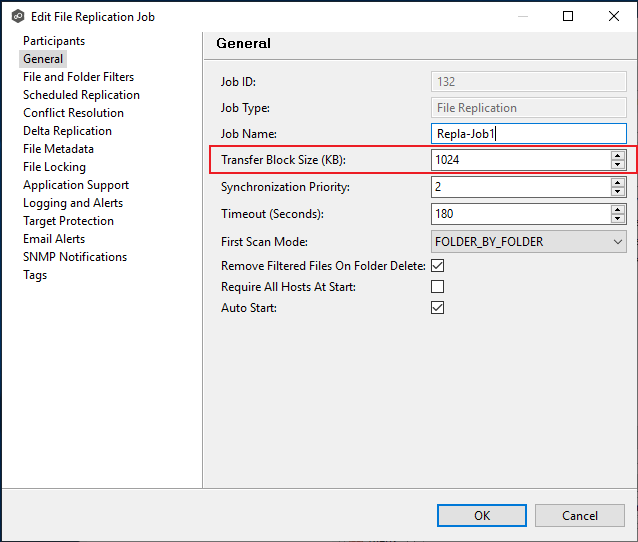

Step 4. In the PMC, edit each job that uses this Agent, modifying the Transfer Block Size (KB) setting to match the Required Job transfer size reported by getperf.

Method 2: Manually modify the Agent configuration

Step 1. On the Agent server, run the following command within a command prompt or PowerShell console to obtain the RTT:

ping <Broker IP>

The output will be similar to this:

PING 172.26.80.39 (172.26.80.39) 56(84) bytes of data.

64 bytes from 172.26.80.39: icmp_seq=1 ttl=64 time=19.618 ms

64 bytes from 172.26.80.39: icmp_seq=2 ttl=64 time=19.427 ms

64 bytes from 172.26.80.39: icmp_seq=3 ttl=64 time=19.462 ms

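64 bytes from 172.26.80.39: icmp_seq=4 ttl=64 time=19.561 ms

Step 2. Using the formula Connections = Perf x RTT / Payload, calculate the number of connections required, using 2 MB as the payload size and the RTT converted to seconds (for example, 19.5 ms is 0.0195 s).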

Step 3. If the number of connections required from Step 2 is less than 4, halve the payload size and repeat Step 2. Repeat until the number of connections is 4 or more, then round the result down to a whole number.

Step 4. Often this will be adequate to make the configuration change; however, it can sometimes overshoot the desired performance. If a more accurate result is required, use the payload calculation above to fine-tune the payload size. In this calculation, use the number of connections from the previous step.
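Steps 1 to 4 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not a Peer Software utility: the function names are hypothetical, the RTT is taken as the average of the `time=` values in the ping output, and the 1 KB lower bound on the payload is an assumed safeguard that does not come from this article.

```python
import math
import re
from statistics import mean

KB = 1024
MB = 1024 * KB


def rtt_seconds(ping_output):
    """Step 1: average the 'time=... ms' samples in ping output; return seconds."""
    samples_ms = [float(t) for t in re.findall(r"time[=<]([\d.]+)\s*ms", ping_output)]
    if not samples_ms:
        raise ValueError("no RTT samples found in ping output")
    return mean(samples_ms) / 1000.0


def plan_throttle(perf, rtt_s, start_payload=2 * MB, min_connections=4, min_payload=KB):
    """Return (connections, payload_bytes, tuned_payload_bytes).

    perf is the desired throughput in bytes per second.
    """
    payload = start_payload
    # Steps 2 and 3: start at 2 MB and halve the payload until the formula
    # yields at least min_connections. min_payload is an assumed floor
    # (not from the article) so that the loop always terminates.
    while perf * rtt_s / payload < min_connections and payload > min_payload:
        payload //= 2
    # Round down to a whole number of connections, but never below one.
    connections = max(1, math.floor(perf * rtt_s / payload))
    # Step 4: fine-tune the payload for the rounded connection count.
    tuned_payload = perf * rtt_s / connections
    return connections, payload, tuned_payload


connections, payload, tuned = plan_throttle(perf=10 * MB, rtt_s=0.1)
print(connections, payload // KB, tuned / KB)
```

With a target of 10 MB/s and an RTT of 0.1 s, this gives 4 connections and a 256 KB payload, which matches the worked example earlier in this article. Dividing the payload in bytes by 1024, as in the `print` call, gives the value in KB for the job's Transfer Block Size (KB) setting.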

Step 5. Use one of the following methods to modify the configuration of the remote Agent:

Method 1

On the Agent server, run the configuration utility updatecfg.exe. This tool is available in the tools subfolder of the Peer Agent installation directory.

updatecfg.exe -t <connections> -p 1

Method 2

On the Agent server, modify the following settings in the Agent.properties file:

jms.broker.syncSends=false

jms.broker.bufferSize=2097152

jms.blob.command.concurrency.num.threads=<connections>

jms.blob.command.prefetch.size=1

Step 6. Restart the Agent service. If this is the first time that getperf has been run on the PMC/Broker server, then restart the Broker service too.

Step 7. In the PMC, edit each job that uses this Agent, modifying the Transfer Block Size (KB) setting to match the calculated payload size above.

Note: PMC job transfer sizes are in KB, so divide a payload size expressed in bytes by 1024 (for example, 262144 bytes is 256 KB).